Generative AI and Responsibility – Part 1/2 by Daniele Proverbio

Attention to the Reader: All content produced by the House of Ethics is 100% gpt-free / llm-free. We don’t use any computational tool to write nor analyze our articles or videos.

Generative AI, responsibility, and salaries

One of the hardest questions that firms and regulators may be soon called to answer is “what is a tool?”

For millennials, tools have been identified as inanimate objects, fully subject to active users to fulfill their scope. Knives have no proactiveness whatsoever, and their effects are fully determined by the user. Interestingly, some cultural heritages had already discussed the idea of “objects beyond tools”, mainly holy swords like Excalibur or signed Japanese katanas, believed to possess wills and to actively influence their yielders – or partners.

Since the Enlightment, however, the Western World had only experienced inanimate tools without proactiveness – until recent years.

Whether Artificial Intelligence (AI)-powered devices are mere tools is to be debated. Without question, they are artificial objects. However, they differ from knives in at least two aspects: the extent by which they are considered inanimate, and the extreme personalization to individual users, which can be regarded as a nuanced form of proactiveness. It is not transcendent animism for holy swords, but immanent functions for “smart” devices.

For what concerns the debate on responsibility, rather than establishing if AI devices are animate and proactive, it is necessary to agree that AI devices can be regarded as if they are. In this context, users’ reactions and behavior matter as much as essence. Over the past decade, hundreds of users’ experience reports have shown that, indeed, AI devices are regarded as animate and proactive – examples include affection to home devices or personal chatbots, up to the recent breakthrough of generative AI, which even expert users nowadays employ as first-pass “consultants” for multiple tasks.

There are reasons to believe that such experiences will become more and more common in the near future, due to the technology itself – more pervasiveness, increased quality in receiving and processing environmental inputs including user prompts, faster and more targeted responses, adaptation and personalization to users, and capability to influence users’ behaviors.

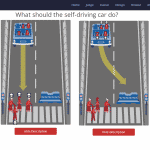

These features are unprecedent if compared to “old-school” tools, thus questioning the very notion of “tool” – and its consequences on responsibility over the whole value and supply chain. Notably, these notions have been already debated in the field of AI when considering autonomous vehicles. The famous “trolley problem” (where a person is asked to divert or not an unstoppable trolley from its trajectory over a kid, at the cost of slamming over three people – and other versions) is a paradigmatic ethical dilemma, regarded as a template for autonomous decisions of self-driving cars. Here, the responsibility issue is immediate, as it involves direct actions on bystanders.

Generative AI

The new generation of AI – generative AI – poses even more subtle nuances. First, they introduced significant change in IT architecture: transformers (a specific technology adding extra layers of meta-training for deep learning models) are possibly even less interpretable than traditional deep learning methods. Second, the scale they operate at is massively bigger: they are trained using millions of parameters (soon billions) over petabytes of data, making overfitting and biases almost certain – somewhere. The scale they may reach is also radically wider: not just thousand rich driver, but billions of internet users. Third, they operate indirectly: contrary to self-driving cars, GPT-enabled chatbots do not take decisions, but provide suggestions and answers – very compelling and credible answers. Apparently, there is in principle no ambiguity in responsibility. After all, browsing websites seems extremely similar – users prompt queries, receive filtered lists of resources, and make their own synthesis. Microsoft Bing is, for instance, marketed as an alternative to web browsing – just another tool.

However, the experience is radically different. Rather than filtering and suggesting content generated by someone else, and providing a list of options to synthesize, genAI is itself providing such synthesis, using ex novo wording, and pulling together an unprecedent amount of information in a single file, all enveloped with human-like text.

Like a piece of advice (GPT-4 suggests recipes from the content of your fridge!), or like the work of a “low-level” consultant.

In a way, a decision (at least over probabilities, by thresholding) was already taken by the algorithm. In addition, to deliver the output, the software either scans its whole database, or it adapts to user’s prompts and style, embedding feedbacks on users’ behavior; in both cases, it goes beyond linearity and directedness of outputs – which is a characteristic of proactiveness. To end with, the generated text is so convincing. Is this still a “traditional” tool?

On responsibility

This ambiguity opens new questions on responsibility.

On the one hand, questions about the position of developers and firms. Are they accountable for what is outputted? To what degree? Is the perspective end-usage part of the equation, similarly to what Einstein and Russel suggested in their manifesto on nuclear power? Or just crafting quality matters, like for “normal” tools, for which constructors are usually responsible for malfunctions? But what is a malfunction in genAI? And how to fix it, since it adapts to billions of users individually? Shall minimum rules be enforced (like a “non nocere” basis, including the ban of, e.g., AI-generated revenge porn)?

On the other hand, there is the responsibility of users. Working with synthesized information, their situation may be regarded as similar to that of “higher level” executives, who are given prompts to make decisions.

In firms, it is their own responsibility to trust the reports or to doublecheck the content (and whoever is in such position is usually paid more, to balance the responsibility burden).

When, for speed and efficiency’s sake, firms encourage employees to perform tasks with genAI assistants, shouldn’t they be framed into the same responsibility transfer scheme? After all, GPT-based text already counts many users having to check for correctness, consistency or references.

As an extreme example, think of the following two situations. In the first one, you are a stagiaire, writing the minutes of an online meeting. You make a slight mistake in your notes, upon which your supervisor needs to plan. According to governance, your supervisor is responsible to ensure that your mistake does not impact the firm’s operations. In the second scenario, you delegate to Microsoft Teams the task of synthesizing the meeting minutes. Since it may misunderstand nuances or biases, the report contains a mistake. Are you in the position of “supervising” the algorithm? Do you meet the standards for a “transfer of responsibility”?

And beyond: can this be applied at all levels, along the whole governance chain and further, through the whole value chain from providers to clients? Do “tokens of extra-responsibility” add up each time a genAI is employed?

Tackling these questions, on top of technological proficiency, will shape thousands of jobs in the future. Not a matter of abstract thinking, they are very practical issues for governance, HR procedures and job rights. We should start addressing these issues now, as the genAI-driven disruption is already happening. And we may agree that, in firms encouraging to use genAI, employees should be paid more.

Part 2/2 by Katja Rausch on “genAI, the “Chain of Accountability”, and gen-ethics”.

Articles by Daniele Proverbio

- Director of Interdisciplinary Research @ House of Ethics / Ethicist and Post-doc researcher /

- Latest posts

Daniele Proverbio holds a PhD in computational sciences for systems biomedicine and complex systems as well as a MBA from Collège des Ingénieurs. He is currently affiliated with the University of Trento and follows scientific and applied mutidisciplinary projects focused on complex systems and AI. Daniele is the co-author of Swarm Ethics™ with Katja Rausch. He is a science divulger and a life enthusiast.

-

In our first article of two, we have challenged traditional normativity and the linear perspective of classical Western ethics. In particular, we have concluded that the traditional bipolar category of descriptive and prescriptive norms needs to be augmented by a third category, syngnostic norms.