A(I)dding Fuel to Fire: How AI accelerates Social Polarization through Misinformation.

AI accelerates the polarization of society through misinformation

As part of the ongoing discussion on artificial intelligence and ChatGPT, concerns have been raised about the potential misuse of AI for the creation and dissemination of misinformation. While AI has the potential to detect, refute, and predict misinformation, the question remains whether AI will become a partner in the heroic fight against misinformation, or whether it will slip out of our control, as social media networks have done.

In this article you will find:

- 4 tips for start-ups using AI-driven disinformation tracking tools

- 3 scandals proving the risks of AI

AI: Between high-risk and the thinking of a 9-year-old child

For years, we have interacted with AI through the algorithms that run social media. So far, these algorithms have brought information overload, addiction, societal polarization, QAnon, fake news, deepfake bots, the sexualization of children, and more, precisely because AI is what maximizes engagement on these platforms. In this article, we will explore the relationship between AI and misinformation, looking at both the potential benefits and the risks.

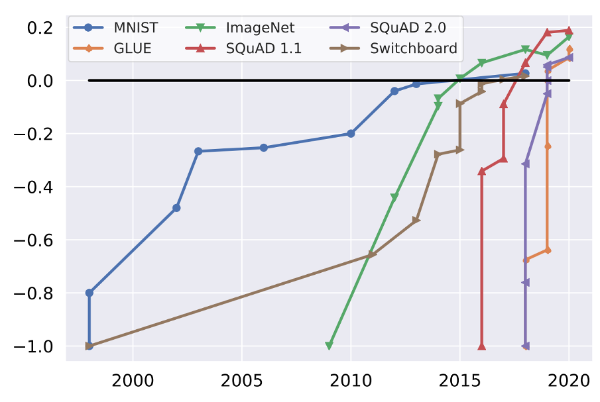

On many benchmarks, machine learning surpassed human performance years ago, as Figure 1 shows (Kiela et al., 2021). A significant year was 2017, when previously separate fields such as music, image, and voice began to be connected. AI researchers have already shown in tests that artificial intelligence connected to the brain can transcribe what we imagine into words or generate images based on what we envision. Using Wi-Fi and radio signals, AI can estimate the number of people in a room.

According to experts, current AI technology equals the thinking of a 9-year-old child, who can estimate what others are thinking and show signs of strategic thinking.

Benefits of AI for Combating Misinformation

AI has several potential benefits when it comes to combating misinformation. One of its main advantages is the ability to process large amounts of data quickly and accurately. This means it can analyze far more information than a human could and detect patterns that humans might miss, which can be useful for identifying false information (Ammar, 2020).

For example, AI can be used to analyze social media posts and detect patterns of behavior that are associated with the spread of misinformation. This could include things like the use of certain keywords or phrases, or the frequency with which certain types of content are shared.

By identifying these patterns, AI can help to flag potentially false information before it has a chance to spread widely (Fink, 2021).
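As a rough illustration of the idea above, here is a minimal Python sketch that flags posts combining a watch-list phrase with frequent sharing. It is a toy under stated assumptions, not how Yonder, Factmata, or any real tool works: the phrase list, the threshold, the `flag_posts` function, and the sample posts are all invented for this example.

```python
from collections import Counter

# Hypothetical watch-list of phrases; a real system would learn these from labeled data.
SUSPICIOUS_PHRASES = ("miracle cure", "they don't want you to know", "share before it's deleted")
SHARE_THRESHOLD = 3  # assumed cut-off for "shared unusually often"


def flag_posts(posts):
    """Return ids of posts that contain a watch-list phrase and whose text appears often.

    `posts` is a list of dicts with the hypothetical keys "id" and "text".
    """
    # Lowercase and collapse whitespace so near-identical copies count as the same text.
    normalized = [" ".join(p["text"].lower().split()) for p in posts]
    share_counts = Counter(normalized)  # how many times each text appears
    flagged = []
    for post, text in zip(posts, normalized):
        has_phrase = any(phrase in text for phrase in SUSPICIOUS_PHRASES)
        if has_phrase and share_counts[text] >= SHARE_THRESHOLD:
            flagged.append(post["id"])
    return flagged


posts = [
    {"id": 1, "text": "Miracle cure found! Share before it's deleted"},
    {"id": 2, "text": "miracle cure found!  share before it's deleted"},
    {"id": 3, "text": "Miracle cure found! Share before it's deleted"},
    {"id": 4, "text": "City council votes on new bike lanes"},
    {"id": 5, "text": "A miracle cure? Doctors urge caution"},
]
print(flag_posts(posts))  # [1, 2, 3]
```

Post 5 mentions a watch-list phrase but is not widely shared, so it is left alone. Requiring both signals is one simple way to avoid flagging every post that merely mentions a sensitive topic.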

The use of AI for monitoring disinformation has existed for years, especially in the field of commercial start-ups. Examples include American companies Yonder and Recorded Future, as well as British company Factmata and French company Storyzy.

These companies offer services such as social intelligence, which uses machine learning to analyze how stories spread across both fringe and mainstream social platforms, and how they influence public opinion.

Additionally, they provide updated blacklists of websites where brands should not advertise due to brand safety concerns.

Another potential benefit of AI for combating misinformation is its ability to identify deepfakes. Deepfakes are videos or images that have been manipulated to create false information, and they can be difficult to detect using traditional methods.

However, AI can be trained to recognize the subtle signs of manipulation that are present in deepfakes, which can help to prevent their spread (Shu, 2021).

Risks of AI for Misinformation

While AI has the potential to be a powerful tool for combating misinformation, it also poses its own risks. One of the main risks is that AI can be trained to generate false information itself. This is known as “adversarial AI,” and it involves training AI algorithms to produce false information that is designed to deceive humans (Tandifonline, 2021).

Concerns about the misuse of artificial intelligence are justified. Recall Microsoft, which introduced its chatbot “Tay” back in 2016: trolls on Twitter taught it racist and xenophobic expressions, forcing the company to shut down the project. Another such scandal occurred this year, when scammers using AI called hospitals pretending to be the loved ones of patients in need of urgent help.

“This tool is going to be the most powerful tool for spreading misinformation that has ever been on the internet,” Gordon Crovitz, co-chief executive of NewsGuard, told The New York Times.

Another risk: Bias.

AI algorithms are only as good as the data they are trained on, and if that data is biased, the algorithm will be biased as well.

This can be a particular problem when it comes to issues like race or gender, where biases can be deeply ingrained in the data (Broussard, 2018).
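The point that biased data produces a biased algorithm can be shown with a deliberately tiny Python sketch. Everything here is invented for illustration: the groups, the labels, the skewed counts, and the `train` function, which simply learns the most common label per group as a stand-in for a real model.

```python
from collections import Counter, defaultdict

# Invented, deliberately skewed training data: (group, label) pairs.
training_data = (
    [("group_a", "approved")] * 8 + [("group_a", "rejected")] * 2
    + [("group_b", "approved")] * 2 + [("group_b", "rejected")] * 8
)


def train(examples):
    """Learn the most common label for each group (a stand-in for a real model)."""
    counts = defaultdict(Counter)
    for group, label in examples:
        counts[group][label] += 1
    return {group: c.most_common(1)[0][0] for group, c in counts.items()}


model = train(training_data)
print(model["group_a"])  # approved
print(model["group_b"])  # rejected
```

The "model" has learned nothing about individuals. It only repeats the imbalance in its training data, which is the mechanism behind the bias concern described above.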

“50% of AI researchers claim that there is at least a 10% chance of AI getting out of control,” says Tristan Harris, co-founder of the Center for Humane Technology.

Furthermore, there is also the possibility that AI could be used to create even more sophisticated deepfakes, making it even harder to distinguish between real and fake information. This was already seen in America in February of this year when videos spread across the internet as part of a state-sponsored disinformation campaign from pro-Chinese bot accounts on social media.

Aza Raskin sees a negative impact of AI on politics. He predicts that 2024 will see the last human election, after which those with greater computing power will win. AI will enable A-Z testing, meaning infinite testing of content in real time, which will lead to the manipulation of people during elections.

Social media expert and co-founder of the Center for Humane Technology, Tristan Harris, argues that the arrival of ChatGPT heralds an era of “fake everything,” reality collapse, trust collapse, automated loopholes in the law, automated fake religions, automated lobbying, exponential blackmail, synthetic relationships, and more. Harris sees the automation of pornographic content as a significant threat, as it will only increase addiction to pornography.

Ongoing challenges to cope with social media based on AI-driven algorithms

AI has the potential to be a powerful tool for combating misinformation, but it also poses its own risks. While AI can be used to identify patterns of behavior that are associated with the spread of false information and to detect deepfakes, it can also be used to generate false information itself and can be biased.

So far, society has been unable to cope with social media based on AI-driven algorithms, let alone the upcoming tools and risks of AI. Social media has disrupted the idea of public service media, which are supposed to provide citizens with information about what is happening. As a result, citizens have become subject to algorithms, and high-quality information, logically speaking, cannot win the battle for the highest engagement. In the pursuit of dopamine and adrenaline, citizens do not focus on what is essential and important. An exhausted and divided society, facing a pandemic, a war with Russia, and global warming, does not appear ready for the incoming AI.

Sources for article.

PhD on Mis/Disinformation at Charles University in Prague

Jindřich Oukropec is a PhD candidate at Charles University in Prague. He has worked in marketing and digital communication for 10 years. In his research, he focuses on brands' responses to mis/disinformation. He is a co-founder of Brand Safety Academy. At Charles University in Prague, Jindřich teaches digital marketing, marketing for non-profit organisations, and a seminar for master's students, “Managing a good reputation of a brand in the era of fake news.”