About Catastrophes and other Little Disasters


On the awareness of extreme events

When approaching our complex world, whether it is described as VUCA (Volatile, Uncertain, Complex, and Ambiguous) or, even worse, BANI (Brittle, Anxious, Nonlinear, Incomprehensible), one gets the overall feeling that traditional engineering approaches, based on the positivist assumptions of linearity and rationality, are falling short. This is driving an increasing demand for alternatives with at least enough explanatory power to help navigate uncertainty and recognize the most pressing risks.

In particular, extreme events have recently risen on the agenda of policy- and decision-makers. Extreme events are involved in climate-related disasters, such as acute droughts or floods, but also in epidemiological superspreading events, in social uproars like the Capitol Hill “incident”, and in companies’ sudden crashes following social polarization. And the list goes on.

‘Let us in!’: What happened after Trump told his supporters to swarm the Capitol (NBCnews.com)

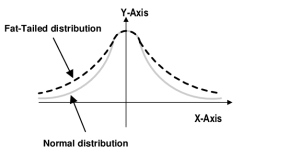

In theories dominated by Gaussian (“normal”) probability distributions, where outcomes close to the mean are expected and anything far from it almost never occurs, extreme and unforeseen events are weird oddities: strokes of very bad luck. Other, more complex and complete theories are needed to unravel such phenomena and prepare for them.

Complex systems and endemic risks

Complex systems science explains such non-Gaussian threats as products of “fat-tailed” distributions, whose probability decays much more slowly away from the mean than a Gaussian’s does. Remarkably, this means that in many complex systems the probability of extreme events is not negligible but an actual risk. Such systems are characterized by multiple interconnected components, linked by virtuous or vicious feedback loops.
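To get a feel for how large the gap can be, the following minimal sketch compares the probability of exceeding a threshold k under a standard Gaussian and under a Student's t distribution with 2 degrees of freedom. The t distribution is an assumption, chosen only as a convenient fat-tailed example with a closed-form tail, P(T > k) = 1/2 − k / (2√(2 + k²)); it is not a model of any specific system, and the two distributions share a centre and unit scale parameter but are not otherwise matched.

```python
import math


def gaussian_tail(k: float) -> float:
    """P(Z > k) for a standard normal variable Z."""
    return 0.5 * math.erfc(k / math.sqrt(2.0))


def student_t2_tail(k: float) -> float:
    """P(T > k) for Student's t with 2 degrees of freedom (closed-form CDF).

    Used here only as an illustrative fat-tailed distribution.
    """
    return 0.5 - k / (2.0 * math.sqrt(2.0 + k * k))


if __name__ == "__main__":
    for k in (2, 3, 5, 10):
        g = gaussian_tail(k)
        t = student_t2_tail(k)
        print(f"k={k:>2}  gaussian={g:.2e}  fat-tailed={t:.2e}  ratio={t / g:.1e}")
```

At k = 5 the Gaussian tail is of order 10⁻⁷, while the fat-tailed one is close to 2%: an event that a Gaussian model treats as practically impossible becomes a plausible risk.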

What does this mean in practice? In an intertwined world, where various actors share information, resources and actions, the effect of A on B can amplify the effect of B on A, which in turn amplifies the effect of A on B again, at an accelerating pace.

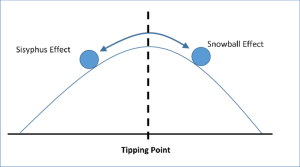

There is more: such complex systems often display “tipping points” which, once reached, can trigger abrupt, large-scale losses of well-being or resilience, whether in an ecosystem or in an economic system such as a corporation. Once again, this results from complex interconnectedness and feedback, which must be recognized in order to improve preparedness against such risks.

The sudden synchronization of swarming systems

As an example, consider the sudden synchronization of swarming systems. Take a group whose members behave completely independently, each acting on their own (e.g., following different ethics). Over time, however, cohesion and agreement may increase, for whatever reason, with minds flickering between their previous beliefs and those of their peers.

As the degree of cohesion reaches a “tipping” value, aligning becomes natural, and the swarm synchronizes around a common idea.

The behavior of the group thus suddenly changes, and the change is not a matter of chance but the opening of new possibilities, unforeseen by traditional, linear paradigms.
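This kind of sudden alignment is a classic complex-systems phenomenon, often studied with the Kuramoto model of coupled oscillators. The sketch below is an illustrative analogy, not a model of any real group: each individual is an oscillator with its own natural frequency (its independent behavior), and the parameter `coupling` stands in for the degree of cohesion (this mapping is an assumption). The order parameter r measures alignment, from about 0 (everyone on their own) to 1 (full synchronization). For natural frequencies drawn from a unit-variance Gaussian, mean-field theory places the tipping value near K_c = √(8/π) ≈ 1.6; in a finite group, r should stay low below it and climb quickly above it.

```python
import cmath
import math
import random


def order_parameter(phases):
    """Kuramoto order parameter: (r, psi), with r in [0, 1] and psi the mean phase."""
    z = sum(cmath.exp(1j * p) for p in phases) / len(phases)
    return abs(z), cmath.phase(z)


def simulate_kuramoto(coupling, n=200, steps=1500, dt=0.05, seed=1):
    """Mean-field Kuramoto model integrated with explicit Euler steps.

    Returns the order parameter r averaged over the last third of the run.
    """
    rng = random.Random(seed)
    # Natural frequencies: each individual's own independent rhythm.
    omegas = [rng.gauss(0.0, 1.0) for _ in range(n)]
    # Random initial phases: the group starts out incoherent.
    phases = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n)]
    tail = []
    for step in range(steps):
        r, psi = order_parameter(phases)
        # Each oscillator is pulled towards the mean phase psi with strength coupling * r.
        phases = [p + dt * (w + coupling * r * math.sin(psi - p))
                  for p, w in zip(phases, omegas)]
        if step >= 2 * steps // 3:
            tail.append(r)
    return sum(tail) / len(tail)


if __name__ == "__main__":
    for k in (0.5, 1.0, 1.5, 2.0, 3.0, 4.0):
        print(f"K={k:.1f}  r={simulate_kuramoto(k):.2f}")
```

Because r itself feeds back into how strongly each individual is pulled towards the group, a little alignment breeds more alignment once the coupling passes the threshold.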

What was potentially beneficial in the example above may instead be dangerous if such unexpected tipping points disrupt, for instance, the dynamics of a corporation. Take Amazon: it built its wealth through a virtuous circle, in which customer care drives purchases, which in turn propel further care and personalization, and so on. Should one of those elements fade (not even vanish, but merely weaken below a certain threshold), the whole circle could turn vicious, and a crash could occur.
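A toy way to see how a virtuous circle can turn vicious is a one-variable loop with a threshold. In this sketch, x ∈ [0, 1] is a hypothetical "health" of the loop (say, a blend of customer care and trust), and the update x ← x + r·x·(x − θ)·(1 − x) is a textbook bistable form (similar to a strong Allee effect in ecology), not a model of Amazon or of any real company; `r` and `theta` are illustrative values. Above θ the loop feeds itself towards 1; just below it, the same loop unravels towards 0.

```python
def step(x, r=0.5, theta=0.3):
    """One update of a toy self-reinforcing loop with a tipping threshold theta.

    x is the hypothetical 'health' of the loop, in [0, 1].
    Fixed points: 0 (collapse), theta (unstable tipping point), 1 (thriving).
    """
    return x + r * x * (x - theta) * (1.0 - x)


def run(x0, steps=200, **params):
    """Iterate the loop from x0 and return the final state."""
    x = x0
    for _ in range(steps):
        x = step(x, **params)
    return x


if __name__ == "__main__":
    for x0 in (0.25, 0.29, 0.31, 0.35):
        print(f"start={x0:.2f}  end={run(x0):.3f}")
```

The starting points 0.29 and 0.31 differ by only 0.02, yet they end at opposite extremes: near the threshold, a small weakening decides between growth and collapse.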

Another example: in social polarization, social media algorithms play a pivotal role. If a person likes A and searches for it, they will be shown similar content, which fosters their interest in A and yields even more suggested content that reinforces their prior beliefs. Harmless when it suggests puppy videos, the same mechanism can fuel conspiracy theories when it acts on search engine results or on AI responses to users’ prompts. “Echo chambers” and “filter bubbles” all emerge from these mechanisms, leading to the polarization of citizens’ and customers’ behaviors. And this happens rapidly, in a way that only seems unexpected.
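The self-reinforcing loop can be caricatured in a few lines. In this toy sketch (hypothetical, not how any real platform ranks content), a feed shows one of several topics with probability proportional to past clicks raised to an exponent `beta`, an assumed strength of personalization, and the user clicks whatever is shown. With `beta = 0` (no personalization) attention stays spread across topics; with `beta > 1` the reinforcement is superlinear and one topic tends to take over the feed, a crude analogue of a filter bubble.

```python
import random


def simulate_feed(beta, topics=5, rounds=5000, seed=7):
    """Toy recommender loop; returns the share of clicks held by the top topic.

    beta is a hypothetical personalization strength:
    each topic is shown with probability proportional to clicks ** beta.
    """
    rng = random.Random(seed)
    clicks = [1.0] * topics  # every topic starts with one click
    for _ in range(rounds):
        weights = [c ** beta for c in clicks]
        shown = rng.choices(range(topics), weights=weights)[0]
        clicks[shown] += 1  # the user engages with what is shown
    return max(clicks) / sum(clicks)


if __name__ == "__main__":
    for beta in (0.0, 1.0, 2.0):
        print(f"beta={beta}  top-topic share={simulate_feed(beta):.2f}")
```

The intermediate case `beta = 1` is the classic Pólya urn, which settles on random shares rather than a monopoly; it is the superlinear case that locks the feed onto a single topic.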

The examples could go on, involving natural systems, social dynamics or financial networks. In general, when such cascading effects are active, what wrongly appeared to be a remote risk may become a critical reality.

Strategies for risk mitigation

Unfortunately, a false sense of security often builds up during times of relative calm. This is natural, but its collapse can have severe strategic and psychological consequences. Raising awareness that extreme and critical phenomena may be engraved in the very mechanisms of complex systems, rather than being “black swans” due to pure misfortune, improves preparedness and provides the means to counter them.

Acknowledging and mapping these risks enables better mitigation strategies and more advanced preparedness. When executives complement their expertise, usually built on day-to-day decision-making during calm periods, with an understanding of how complex systems behave, both safety and adaptability can improve.

For instance, mapping the network structure of a company, of its supply chain or of its impacts on society makes it possible to identify sensitive nodes and increase the robustness of the system as a whole.
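One simple way to look for sensitive nodes is a knockout analysis: remove each node in turn and measure how much the largest connected part of the network shrinks. The supply chain below is entirely hypothetical, and this removal test is only one crude proxy; libraries such as NetworkX provide richer measures (e.g. articulation points or betweenness centrality).

```python
from collections import deque


def largest_component(adj, removed=None):
    """Size of the largest connected component, optionally ignoring one node."""
    seen = set() if removed is None else {removed}
    best = 0
    for start in adj:
        if start in seen:
            continue
        # Breadth-first search over the component containing `start`.
        size = 0
        queue = deque([start])
        seen.add(start)
        while queue:
            node = queue.popleft()
            size += 1
            for nb in adj[node]:
                if nb not in seen:
                    seen.add(nb)
                    queue.append(nb)
        best = max(best, size)
    return best


def rank_sensitive_nodes(edges):
    """Rank nodes by how much their removal shrinks the largest component."""
    adj = {}
    for a, b in edges:  # undirected links
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)
    baseline = largest_component(adj)
    impact = {n: baseline - largest_component(adj, removed=n) for n in adj}
    return sorted(impact.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical supply chain, for illustration only.
EDGES = [
    ("supplier_A", "factory"), ("supplier_B", "factory"),
    ("supplier_C", "warehouse_2"),
    ("factory", "warehouse_1"), ("factory", "warehouse_2"),
    ("warehouse_1", "retail_north"), ("warehouse_1", "retail_south"),
    ("warehouse_2", "retail_east"),
]

if __name__ == "__main__":
    for node, loss in rank_sensitive_nodes(EDGES):
        print(f"{node:<13} removal shrinks largest component by {loss}")
```

Here the single factory ranks first: removing it splits the nine-node chain into fragments of at most three nodes, which suggests where redundancy would add the most robustness.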

Another means of buffering uncertainty and mitigating extreme risks, which by definition carry large costs, is to strengthen core values and brand identity. This adds a further layer on top of the product-solution network. Such a layer is often more resilient: it relies on slowly built trust, is less prone to feedback dynamics (the ethos of a company is usually built upfront and one-sided), and tends to survive flash crashes. A solid foundation can absorb internal and external shocks.

A growing number of natural, social and economic systems are being recognized as displaying extreme, “fat-tailed” events due to their complex nature. Embracing a mindset that departs from the typical linear and additive approaches, and instead accounts for the complexity of a vast array of systems, may provide a competitive advantage when facing uncertainty, both in terms of awareness and preparedness and in terms of tools to address unforeseen issues. The time is now ripe to transfer academic knowledge to strategic decision-making, towards better risk mitigation.