A Modern AI-Fable: From Criti-Hype to Hype Hopping to Big Bang.

Attack, apocalypse, disaster, extinction, dystopia, takeover, implosion and the latest traumatic scenario of total eclipse: ChatGPT collapsing by eating itself. Is the Ouroboros of innovation biting its own innovative tail? A modern tale? Maybe not that modern…

In this last article of our “Little Disasters and Catastrophes” Summer Series, we take a closer look at the Disaster Business.

Specifically, at how it has morphed into a highly effective business model, especially around artificial intelligence, AGI and, lately, genAI.

It uses fear as fuel and is orchestrated by a “magic circle” that reaches far beyond mere tech gurus. This business practice and cash-generating model has its own name: criti-hype.

The Art of Criti-hype by Lee Vinsel

In his article “You’re Doing It Wrong: Notes on Criticism and Technology Hype”, Lee Vinsel coined the term criti-hype.

Far from being an ad hoc phenomenon, criti-hype is described as a well-oiled, insidious mechanism involving many players. Its reach is wide: self-driving cars, genetic engineering, the “sharing economy,” blockchain, cryptocurrencies, the genAI boom, NFTs, the metaverse or plain old AI; in short, anything “that will lead to massive societal shifts in the near-future.”

It is about criticism that both feeds and feeds on hype, hence criti-hype, a term Vinsel finds “both absurd and ugly-cute.”

In essence, Vinsel describes criti-hypers this way:

“the kinds of critics that I am talking about invert boosters’ messages — they retain the picture of extraordinary change but focus instead on negative problems and risks.”

Simply put: you hype and then criticize the hype. Recognize the idea?

Take Geoffrey Hinton, the “godfather” of AI. First, he hyped AI as a universal solution. His most memorable hype concerned radiology and deep learning, at the 2016 Machine Learning and the Market for Intelligence conference in Toronto.

“We should stop training radiologists now. It’s just completely obvious that within five years, deep learning is going to do better than radiologists.” YES, “stop training radiologists”!

Now, seven years later, Hinton tells a radically different story. He warns humanity about the dangers of AI, including the longer-term risks associated with the possibility of AI becoming more “intelligent” than humans.

All these claims are deeply damaging and cause societal harm: perhaps not immediate, but latent harm whose consequences surface later. In ethics, such outcomes are referred to as “ethical debt”: harm is done now, and its major impacts are felt decades later. Aren’t we facing the same scenario with ChatGPT, genAI and the reckless AI race among Big Tech companies unleashing immature and potentially harmful products onto the market at high speed?

Such criti-hype claims have become so common that we take them as statements of fact or professional forecasts by industry giants. But they are not!

“A.I. Poses ‘Risk of Extinction,’ Industry Leaders Warn: Leaders from OpenAI, Google DeepMind, Anthropic and other A.I. labs warn that future systems could be as deadly as pandemics and nuclear weapons.” Headlines like this are no rarity these days. The apocalypse has been totally banalized and mainstreamed.

Relevant here is the insight of Professor Daniele Faccio, a Royal Academy of Engineering Chair in Emerging Technologies and Professor of Quantum Technologies at the University of Glasgow, who points to the real risk beneath the hype: misinformation spread by AI systems and the creation of false synthetic data.

“I think that misinformation and the hype around some aspects of AI do not pose significant risks, or at least, not any more so than the risks posed by any other misinformation spread through social media for example. However, we might want to be concerned about the misinformation that AI itself could start to spread on social media.”

Hype: Science fiction or a new business model?

Did we mislabel these oracular statements, mistaking them for forecasts rather than the fictitious outbursts of hyped-up entrepreneurs, scientists and ML engineers?

Did we misplace the cursor? Or were we influenced, persuaded to do so?

Chronic “hype hopping”?

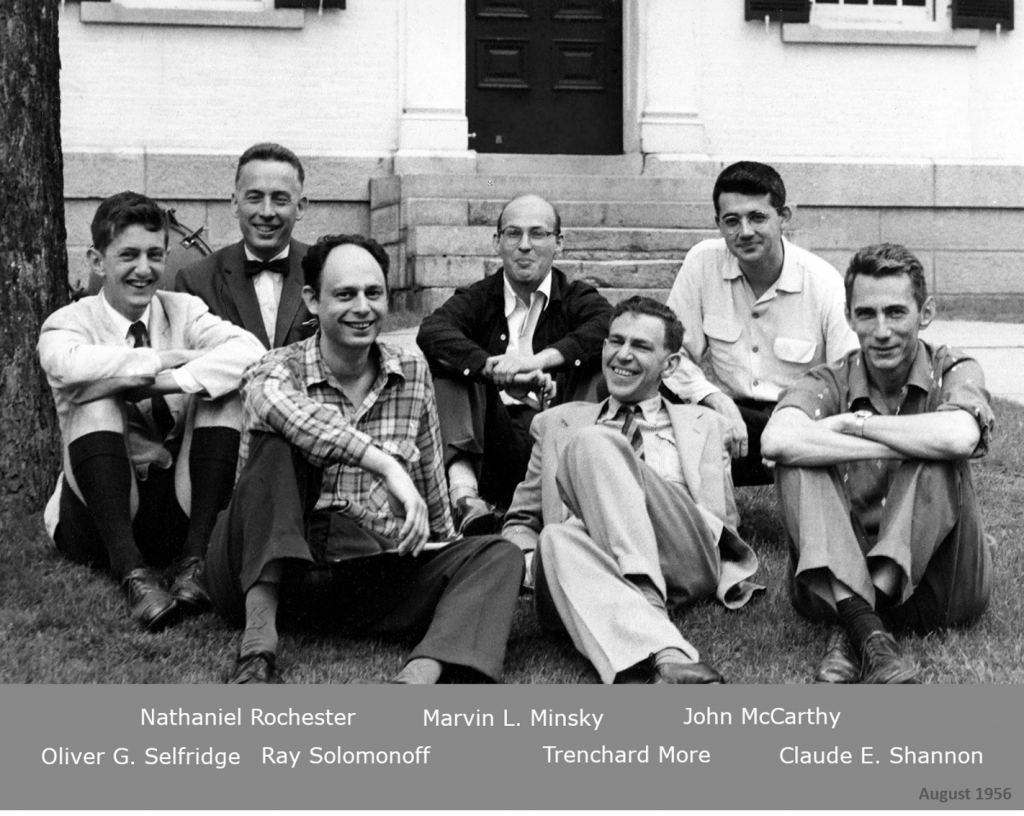

The 1958 New York Times article “New Navy Device Learns by Doing” may well be an early indicator of a long-misplaced cursor.

“The Navy revealed the embryo of an electronic computer today that it expects will be able to walk, talk, see, write, reproduce itself and be conscious of its existence.”

Far from being scientifically grounded, such hyped statements are closer to ad hoc prophecies than to evidence-based projections. A perfect example of decades-long “hype hopping”.

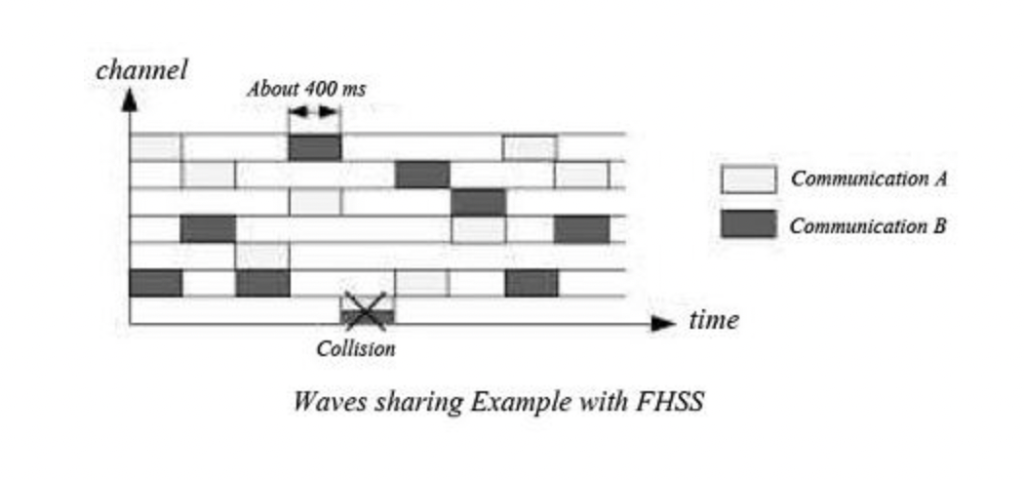

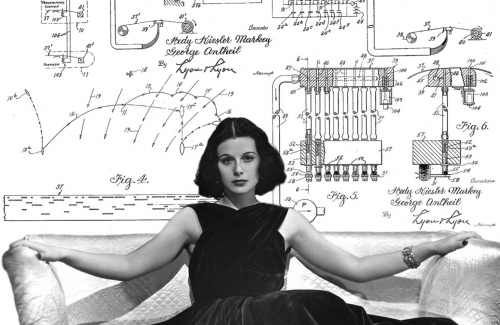

We use the term “hype hopping” in reference to Hedy Lamarr, the inventor who, together with her collaborator, the composer and pianist George Antheil, patented frequency hopping, a technique used today in wireless communications such as Wi-Fi and Bluetooth. During WWII, Lamarr and Antheil came up with frequency hopping to keep radio-guided torpedoes from being jammed and diverted by the Germans. Hedy Lamarr was one of Hollywood’s most popular actresses, and one of science’s least-known inventors.

Timeless, isn’t she? Isn’t it? Hype hopping. Musk, Altman, Pichai and consorts all excel at it…

In his article, Lee Vinsel points to the professionalisation of criti-hypers and even calls the method an “academic business model”, often funded with money from private foundations, para-governmental institutions and the digital technology industry itself.

“Criti-hype indeed challenges senseless claims about powers of new technologies, including Evgeny Morozov’s pioneering work on “solutionism”; Meredith Broussard’s questioning of artificial intelligence; Morgan Ames’, Christo Sims’, and Roderic Crooks’ critically examining claims around “EdTech”; Sarah Roberts’, Tarleton Gillespie’s, Mary Gray’s, Siddharth Suri’s.”

The Ouroboros Disaster Cycle

Criti-hype is neither new to the digital age nor specific to AI or technology; the systemic practice has been going on for decades. Vinsel reports:

“For example, in its 2017 report, the AI Now Institute, which is associated with New York University, paraphrased another report from the consulting firm McKinsey claiming that 60 percent of occupations would have 1/3 of their activities automated.

“This would be an enormous increase in productivity from a single set of technologies, probably one of the largest in history. These claims precisely mirrored the advertising digital technology firms were putting out as well as visions coming out of organizations like the World Economic Forum that we were on the cusp of a ‘Fourth Industrial Revolution.’”

A remarkable insight, since we still see the same modus operandi today, with McKinsey, Deloitte and Accenture shoring up their tremendous investments in AI with conveniently oriented reports.

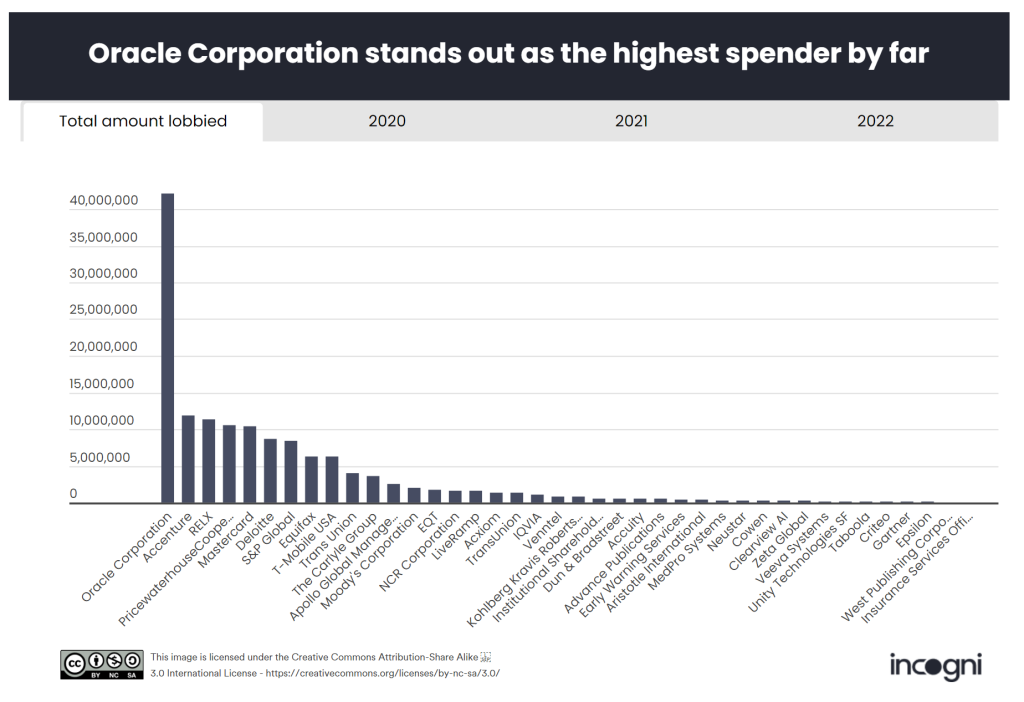

This chart on data brokers’ lobbying money is highly revealing. Just check out the biggest spenders…

After Oracle, Accenture is investing massively in AI: over $3 bn. It is also building a genAI system to advise (they use the term “guide”) its clients!

“The company also said it is rolling out a new platform called AI Navigator for Enterprise, a generative AI-based platform, which will help guide clients through building and using AI in their businesses.”

“Over the next decade, AI will be a mega-trend, transforming industries, companies, and the way we live and work, as generative AI transforms 40% of all working hours,” said Paul Daugherty, group chief executive, Accenture Technology.

Criti-hype enablers play a crucial part alongside criti-hype claimers. The mechanism links two moves: first, investing huge amounts of money, which inflates the hype and creates the need; then, rapidly deflating the hype with alarming statements about its risks, risk management being one of these firms’ most profitable businesses, and one that overlaps with other cross-industry practices. Undoubtedly, risk management is one of the biggest winners of this systemic Ouroboros Disaster Cycle.

Why is criti-hype insidiously dangerous and ethically foul?

Why dangerous and foul?

First, because the inflated narrative influences our perception and behavior, and thus our ethical stamina. In “You Are Now Remotely Controlled”, Shoshana Zuboff, author of “The Age of Surveillance Capitalism” and professor emerita at Harvard Business School, practices criti-hype on criti-hype: a sort of para-criti-hype, an exponential criti-hype. The fractal effect is endless.

She points to how we are remotely tuned and controlled by leading tech companies. With little scientific proof behind her plausible-sounding statements, these claims, too, are criti-hyped at will. Recent studies on polarization, for example by Joanna Bryson and colleagues, have shown that science fails to find a correlation between social media use and affective polarization; the correlation lies rather with economic precarity.

Second, because it distracts us from the real harms, deeper fallacies and real-world problems that affect people right now, such as Sam Altman’s latest project, Worldcoin: a cryptocurrency tied to a highly unethical biometric, eye-scanning ID scheme. It is happening here and now, and it has been flying under the radar for years.

Third, because it creates a hostile and anxious atmosphere, with new social ailments like “AI anxiety”, where workers and the public at large fret over an uncertain future shaped by unpredictable, ubiquitous AI perceived as an existential threat.

These fears are inflated by “professional” forecasters such as Goldman Sachs, which projected a “significant disruption” of the labor market with “some 300 million jobs being eliminated by generative AI”, one of many studies published in March. Such apocalyptic predictions are no better founded than the astronomical productivity boom predicted for genAI and conversational chatbots like ChatGPT.

So tech hype and tech criticism go hand in hand: they are two sides of the same AI marketing coin. How can ethics help? By creating useful information channels and networks of reliable, independent industry professionals, and by developing reliable, responsible frameworks built on collective, decentralized ethics like Swarm Ethics™.

For this entirely novel business architecture, intellectual and financial “independence” will be crucial.

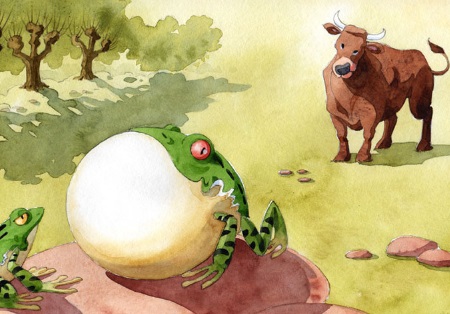

Nevertheless, the criti-hype phenomenon reminds us of Jean de La Fontaine’s fable “The Frog Who Wanted to Be as Big as the Ox” (“La Grenouille qui se veut faire aussi grosse que le Bœuf”). Why? Because it all ends with a Big Bang.

“Little Disasters and Catastrophes” Summer Series / D. Proverbio / E. Nadir

Katja Rausch specializes in the ethics of new technologies and works on ethical decisions applied to artificial intelligence, data ethics, machine-human interfaces and business ethics. Katja is a linguist and a specialist in 19th-century literature (Sorbonne University). She also holds a diploma in marketing, audio-visual and publishing from the Sorbonne and an MBA in leadership from the A.B. Freeman School of Business in New Orleans. In New York, she worked for four years at the management consulting firm Booz Allen & Hamilton. Back in Europe, she became strategic director for an IT company in Paris, where she advised, among others, Cartier, Nestlé France, Lafuma and Intermarché. The proposed concept of swarm ethics revolves around three pillars: behavior, collectivity and purpose. It moves away from cognitive, judgment-based ethics toward a new form of collective ethics driven by purpose.

For over 12 years, Katja Rausch has been teaching Information Systems in the Master 2 in Logistics, Marketing & Distribution at the Sorbonne, and for four years she has been teaching Data Ethics in the Master of Data Analytics at the Paris School of Business.

She is the author of six books, the latest being “Serendipity or Algorithm” (2019, Karà éditions). Above all, she appreciates polite, intelligent and fun people.