The 3 Laws of Robotics by Asimov

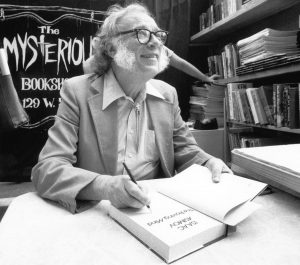

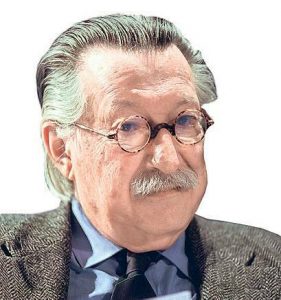

Isaac Asimov - Science-fiction author

Isaac Asimov was a Russian-born author who grew up in Brooklyn, became a professor of biochemistry at Boston University's School of Medicine, and later gave up teaching to become a full-time science-fiction writer.

The term “robot” itself is borrowed from another writer, Karel Čapek, who in 1920 wrote a play called R.U.R. (Rossum’s Universal Robots).

In 1950 Asimov published I, Robot, the work now cited whenever the Three Laws of Robotics (also known as Asimov’s Three Laws) are mentioned.

I, Robot is a collection of nine short stories. In all of them, robots behave in unusual and counterintuitive ways (for robots) because they are conscious, have emotions and follow an ethical code.

Today, the Three Laws of Robotics are nothing more nor less than an ethical code for roboticists, designers, engineers and anyone involved in AI.

It is remarkable, and rather fun, that the entire robotics movement is semantically rooted in literature, and more precisely in science fiction.

I, Robot, the book in which the Three Laws are stated, has a rarely mentioned subtitle that is highly telling:

“The Day of the Mechanical Men – Prophetic glimpses of a strange and threatening tomorrow.”

3 Laws of Asimov - 3 Laws of Robotics

In the short story “Runaround” (1942), Asimov lays out the three fundamental laws of robotics:

Three Laws of Robotics

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.

2. A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.
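The Three Laws form a strict priority ordering: each law gives way to the ones above it. As a minimal Python sketch, assuming a hypothetical `Action` record whose three boolean flags stand in for the (in reality very hard) judgement of harm, obedience and self-preservation, candidate actions can be ranked lexicographically so that an earlier law always outranks every later one. The names `Action`, `law_key` and `choose` are illustrative inventions, not anything from Asimov.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """Hypothetical candidate action; the hard part (judging harm) is reduced to flags."""
    name: str
    harms_human: bool     # First Law: injures a human or lets one come to harm
    obeys_order: bool     # Second Law: follows the order given by a human
    preserves_self: bool  # Third Law: the robot survives the action


def law_key(action: Action) -> tuple[bool, bool, bool]:
    # Tuples compare element by element, so the First Law decides first,
    # the Second Law only breaks ties, and the Third Law breaks remaining ties.
    return (not action.harms_human, action.obeys_order, action.preserves_self)


def choose(actions: list[Action]) -> Action:
    # Pick the action that best satisfies the laws in priority order.
    return max(actions, key=law_key)


options = [
    Action("walk into the furnace as ordered", harms_human=False, obeys_order=True, preserves_self=False),
    Action("refuse the order and stay safe", harms_human=False, obeys_order=False, preserves_self=True),
    Action("follow an order to push a person", harms_human=True, obeys_order=True, preserves_self=True),
]
print(choose(options).name)  # prints: walk into the furnace as ordered
```

Obedience beats self-preservation here, and no order can justify harming a person. Asimov's own stories do not apply the laws this mechanically: in “Runaround”, a casually given order and a strengthened self-preservation drive end up balancing each other out.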

Later, a 4th law was added by Lyuben Dilov, a Bulgarian science fiction writer:

4. A robot must establish its identity as a robot in all cases.

And a 5th law was proposed by Nikola Kesarovski, a Bulgarian science fiction writer, in his book The Fifth Law (1983):

5. A robot must know it is a robot.

The origin of the word “robot”: Karel Čapek, another science fiction writer

In Czech, “robota” means “servitude,” “forced labor” or “drudgery.” The main idea of Čapek’s work is that robots are made to do the humans’ work, until they wake up and rebel. R.U.R. is a play, first published in 1920 and premiered in 1921.

R.U.R. tells the story of a company, Rossum’s Universal Robots, that uses the latest biology, chemistry and physiology to mass-produce workers who “lack nothing but a soul.” The robots perform all the work that humans prefer not to do and, soon, the company is flooded with orders.

In early drafts of his play, Čapek named his machines labori, after the Latin root for labor, but worried that the term sounded too “bookish.”

At the suggestion of his brother, Josef, Čapek ultimately opted for roboti.

Is the hype around robots something modern?

Definitely not.

Before the word robot was used, the idea of an autonomous or mechanical entity was already present.

The ancient world was fascinated by artificial beings. In Greek mythology, Hephaestus, the god of fire, creates Talos, a bronze giant. In the Renaissance, Leonardo da Vinci designs a robot that moves its arms and head and stands up…

There were several ways to express the idea of “mechanical” constructions. Early concepts of artificial beings were centered not on intelligence but on the mechanical side.

- Android (13th century) – a humanoid robot

- Automaton (1611) – self-operating machines such as mechanical humans, mechanical toys, moving astronomical models and mechanical musicians

- Robot (20th century, Čapek) – an autonomous, non-biological (i.e. artificial) entity

- Cyborg – an entity that combines artificial and biological components

Updating, upgrading Asimov?

Are today’s machines compatible with Asimov’s texts?

To shape an answer, it is useful to categorize robots by their use: service robots, industrial robots and military robots.

It seems clear that a Roomba vacuum cleaner need not be treated as a potentially harmful robot. If it were, a car or a dishwasher would have to be as well.

At the other end of the spectrum, however, are military robots. These devices are being designed for spying, bomb disposal or load carrying, all in the name of saving, protecting and defending a country and its people.

The problem is that what looks like defense from one side looks like attack from the other. Even if spying or killing should not be allowed, in some cases they are treated as the first necessity for protection. And if these robots get hacked, is that ethical? Is it still hacking, or a good action for the right cause? Is hacking, then, not always a criminal act? That, at least, is what military and political dignitaries claim.

Code vs Heart

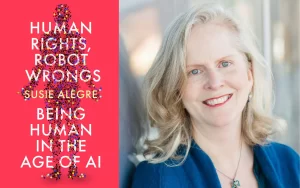

In any case, what does “harm” actually mean? Can there be physical or psychological harm when it comes to robot companionship, for example?

In Spike Jonze’s movie Her, Theodore, who writes personal letters for other people, falls for an AI.

The AI, Samantha, communicates only by voice (that of Scarlett Johansson). Theodore, played by Joaquin Phoenix, slowly but surely falls in love with this vocal, funny, spiritual presence, which is always consistently herself.

At first, everything is fine. They have fun, laugh and ask each other about their day, like a real couple. The AI even tells him jokes to cheer him up, such as “Who’s my dad?” – “Data.”

The romance works well. Then, gradually, Theodore realizes that the AI program is not exclusive to him.

For him it is a real romance; for the AI, only raw data. It is Theodore’s interpretation that turns spoken words into flirtation, into romance.

He is just one end of an input/output romance: on one side, words pulled from a database and spoken aloud; on the other, words felt and understood, triggering feelings, hope and, ultimately, despair.

So did the AI harm him? Who is responsible?

Human-machine interaction is a complex question.

Joseph Weizenbaum, the brilliant MIT professor and inventor of the first chatbot, Eliza, was shocked in 1966 when he saw how quickly and deeply people fell for his little program, which was based on very simple code.

His colleagues at MIT, even his own secretary, treated the program as if it were as reliable as a human. This came as a real shock to the scientist, who had conceived Eliza more as an exercise, almost a coding joke. The name Eliza was chosen in reference to Eliza Doolittle of the musical My Fair Lady.
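To give a sense of how little code such a program needs, here is a heavily simplified Python sketch of Eliza-style keyword matching: a sentence is checked against a few patterns, and the matching fragment is echoed back with first- and second-person words swapped. The rules, the `reflect` and `respond` helpers and the default reply are hypothetical illustrations, not Weizenbaum’s original DOCTOR script.

```python
import re

# Hypothetical rules in the spirit of Eliza: a keyword pattern and a reply template.
# Rules are tried in order; the first match wins.
RULES = [
    (re.compile(r"\bI need (.*)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bI am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your family."),
]
DEFAULT = "Please go on."

# Swap first- and second-person words so the echoed fragment sounds like a reply.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "you": "I", "your": "my"}


def reflect(fragment: str) -> str:
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(sentence: str) -> str:
    # Drop surrounding whitespace and trailing punctuation before matching.
    sentence = sentence.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(sentence)
        if match:
            # Templates without placeholders simply ignore the captured groups.
            return template.format(*(reflect(g) for g in match.groups()))
    return DEFAULT


print(respond("I am unhappy with my job."))  # prints: How long have you been unhappy with your job?
```

There is no understanding anywhere in this loop, only pattern matching and word substitution, which is exactly what made people’s emotional response to Eliza so unsettling for its author.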

A question of consequences and responsibility

Eliza’s code was very simple, but its psychological impact on people was considerable: a prelude to our constant interaction with phones, tablets, computers, apps and all our other devices.

In interview after interview, Weizenbaum stressed the one regret of his life: having invented Eliza and thus opened Pandora’s box. He felt responsible.

Until his death, he was a strong advocate for the importance of responsibility in any act of creation, development or decision.

Where does responsibility lie with robots?