The Cultural Basis of Ethical Choices

An online quiz popularized the “Trolley Problem”, focusing on autonomous cars and proposing multiple nuanced scenarios. The results differ strikingly depending on the country and cultural heritage of respondents.

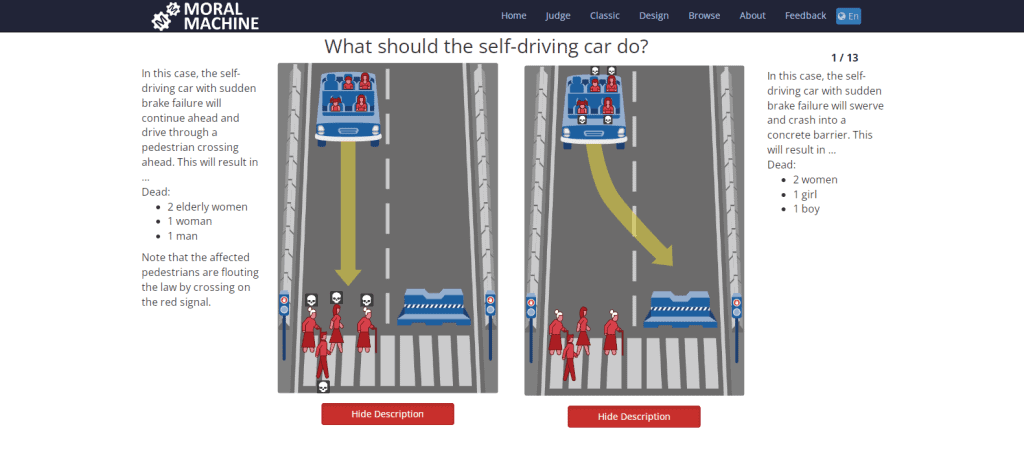

Focus on the online “Moral Machine”, a “platform for gathering a human perspective on moral decisions made by machine intelligence” (original definition www.moralmachine.net).

A brief recap of the "Trolley Problem"

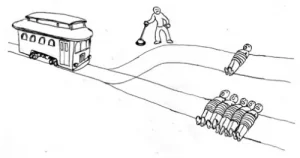

The “Trolley Problem” is a series of ethical dilemmas, presented as thought experiments, concerning the actions to take when multiple lives are at stake.

In its original formulation, a runaway trolley or train is about to crash into a group of people chained to the rails. A bystander may pull a switch and divert the trolley onto an alternative track, where a second set of people lies (fewer individuals, a person dear to the bystander, or some other variation). What would the bystander choose?

Although seemingly far-fetched, the dilemma has become a classic problem for autonomous cars.

If a car encounters an unexpected event, such as a child suddenly crossing the road, which decision should it take?

Save the child despite crashing into an oncoming car? Safeguard the “public” and run over a single child? And what if other factors are included in the scenarios, such as the lawful behavior of the people at risk, their age, the number of people involved, the presence of animals, etc.?

As plausible answers affect regulations for autonomous cars, including the civil and criminal liability of owners and manufacturers, settling the question is a matter of actionable ethics.

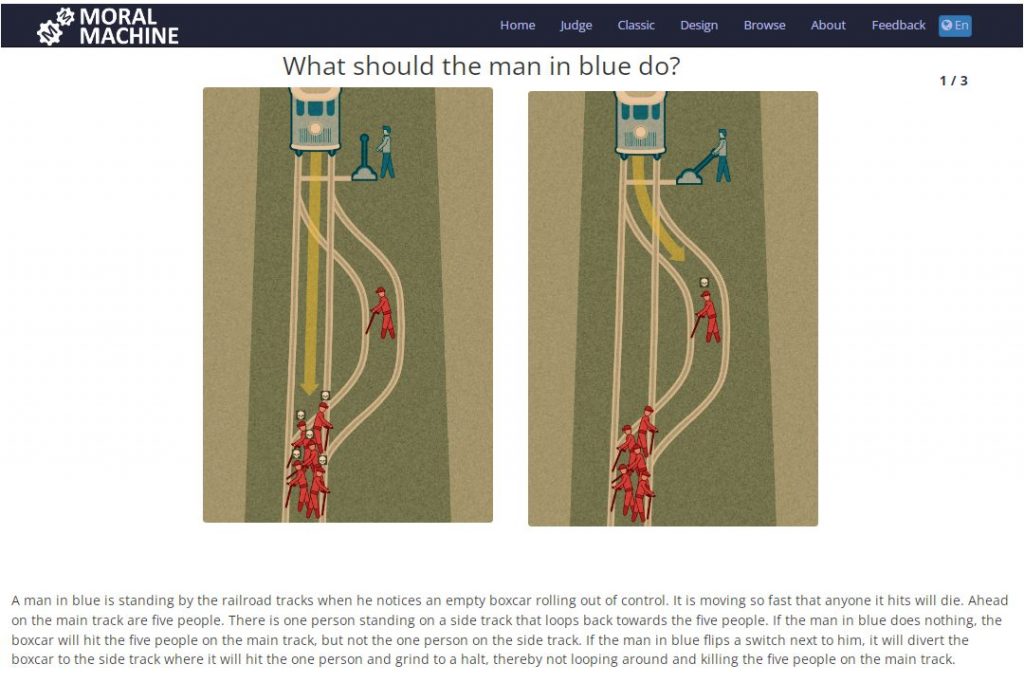

The social experiment

Launched in 2016 and still running, the Moral Machine quiz and social experiment aims to capture trends in opinion throughout the world.

Moral Machine was developed and run by a consortium of universities (MIT, University of Exeter, University of British Columbia, Toulouse School of Economics and the Max Planck Institute for Human Development), in tandem with ethicists and experts in artificial intelligence.

Its purpose is not to derive templates for ethical rules, but rather to gauge the spectrum of choices that are popular in each country.

Although the debate is left to experts and decision-makers, such a study can provide a “thermometer” of what could be more or less accepted by local societies.

It could also reveal whether there are regional, continental or global patterns.

The resulting paper, published in 2018, describes in detail the variety of answers and the cultural clusters that emerged from the study.

The authors identified three macro-clusters: Western, Eastern and Southern, segmented by the similarity scores associated with the answers.

The cluster labels derive from the fact that, with few exceptions (e.g. Andorra belongs to the “Eastern” group), most countries within a cluster could also be grouped geographically, indicating overall cultural proximity.

Three cultural blocs for ethical choices

The aggregated results show that the “Western” bloc would prefer inaction, or sparing the many or the young.

The “Eastern” bloc shows a marked preference for saving pedestrians or lawful citizens, while the “Southern” bloc would tend to spare females, young people or those with higher status.

The first two blocs would also prefer to save humans, while “Southerners” do not consider that a priority.

Breaking the results down by individual country also reveals striking differences within and across blocs. For instance, data from the US reveal a stronger tendency to save the young and fit; China would prioritize women and the elderly; Russian data, instead, show a higher preference for sparing males, while Italians would rather spare the youngest.

Using similarity scores, it is also possible to determine which countries are “ethically” more similar. Some results could be expected (e.g., Italy and Spain, Argentina and Uruguay, or the UK and Australia). Other results may be more surprising, such as Malta and Mongolia showing “ethical proximity”, or Lithuania and Nicaragua.
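To make the idea of “ethical proximity” concrete, here is a minimal sketch of how pairs of countries could be ranked by similarity. It assumes each country is summarized by a vector of preference scores and that closeness is measured with a plain Euclidean distance. The country labels, the three attributes and all the numbers are invented for illustration; they are not data from the study, and the measure used here is an assumption rather than a restatement of the authors' method.

```python
import math
from itertools import combinations

# Hypothetical preference vectors, one entry per dilemma attribute
# (say: spare the young, spare the many, spare the lawful).
# All values are invented for illustration, NOT taken from the study.
preferences = {
    "Country A": [0.60, 0.50, 0.30],
    "Country B": [0.58, 0.52, 0.28],
    "Country C": [0.20, 0.45, 0.70],
    "Country D": [0.25, 0.40, 0.65],
}


def euclidean_distance(u, v):
    """Straight-line distance between two equal-length score vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def rank_pairs(prefs):
    """Return (distance, country, country) tuples, closest pair first."""
    pairs = [
        (euclidean_distance(prefs[a], prefs[b]), a, b)
        for a, b in combinations(sorted(prefs), 2)
    ]
    return sorted(pairs)


ranked = rank_pairs(preferences)
for distance, a, b in ranked:
    print(f"{a} - {b}: {distance:.3f}")
```

The smaller the distance, the more “ethically” similar two countries appear under this toy measure. Grouping countries into blocs would then amount to running a clustering procedure on the same distances.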

Because of such unintuitive outcomes, the large but potentially biased pool of respondents, and the limited number of scenarios examined, the study should not be considered conclusive.

However, the striking amount of data collected in what is the first worldwide data-driven socio-ethical experiment sets a non-negligible basis for discussion.

Some Take-home Messages, and the Road Ahead

Regardless of individual results, two main considerations can be drawn from the Moral Machine experiment.

First Consideration

First, cultural traits can strongly influence ethical choices across countries. The responses to the dilemmas show clear variations that must be considered when discussing such matters, both as thought experiments and as actionable decisions for implementing algorithmic choices.

Depending on how they are interpreted, these results can even be used for or against the proposal of equipping autonomous cars with “tolerance” toggles (that is, allowing the user to decide the level of “how much a car should prioritize one decision or another”).

As scenarios are manifold and ever-changing, it may be extremely complicated to make decisions a priori and to shift responsibility to the drivers, especially when considering cultural, geographical and moral values.

Second Consideration

Nonetheless, a second consideration is that the search for common ground is not doomed from the start. The identification of clusters, displaying similarities at the macro level, may spur consensus-seeking efforts across numerous countries.

What would happen to tourists, though, deserves further reflection.

Overall, the experiment shows that ethical choices are far from universally shared or monolithic; they depend heavily on culture, social norms and ethnic background.

Establishing accepted ethical guidelines and, subsequently, civil laws is a matter of continuous debate within and across cultures: a strenuous, lifelong effort rather than a set of absolute principles.

The next question is, therefore, whether ethical matters should remain the domain of expert panels, or whether the public should have a direct voice, through polls or other means.

Surely, even though ethical norms are likely conventional and consensual, majority rule is often too simplistic to provide adequate proxies and guidance.

But can a “data-driven ethics” play a significant role in shaping conventions and consensus in the future?

Director of Interdisciplinary Research @ House of Ethics / Ethicist and Post-doc researcher

Daniele Proverbio holds a PhD in computational sciences for systems biomedicine and complex systems as well as an MBA from Collège des Ingénieurs. He is currently affiliated with the University of Trento and follows scientific and applied multidisciplinary projects focused on complex systems and AI. Daniele is the co-author of Swarm Ethics™ with Katja Rausch. He is a science communicator and a life enthusiast.
