There are several ways to obtain an “artificial intelligence” (AI), and each one is associated with different degrees of control and predictability, with immediate ethical implications.

Getting started: this “intelligence”, those “intelligences”

The rest of the article builds upon the definition of “intelligence” as “the ability to solve non-trivial tasks”. For the moment, we do not inquire further into what “non-trivial” could mean, but leave it to the intuition of the reader. Not only does this allow the article to be shorter than four monographs, but it also reflects the current lack of a precise, universally shared definition of the concept. Likewise, we restrict ourselves to a working definition of intelligence rather than pursuing an all-encompassing one, which arguably does not yet exist.

According to the current state of the art in computer science, there are roughly three methods to create something that satisfies the above definition of AI: providing step-by-step instructions; stating a desired objective and asking the “intelligence” to get as close to it as possible, given its capabilities; and instructing many simple units to perform trivial tasks and to cooperate, so that the solution of the non-trivial task “emerges” from the collective behavior.

Hence, from a practical perspective, we speak of “artificial intelligences” in the plural. Not only do these methods mark the perimeter of what is currently possible to create (and to debate), but they also carry different implications for society. Let us look at each in turn.

The procedural “intelligence”

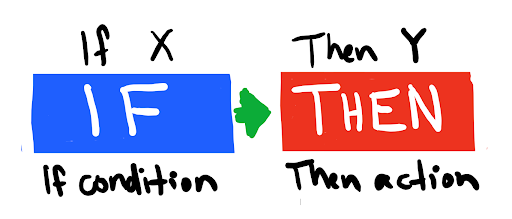

The first method, although sometimes overlooked, is still the most common: it corresponds to the “classical” way of procedural programming. To obtain “intelligent” algorithms, programmers write explicit rules that are subsequently executed.

Usually, these rules roughly correspond to long chains of “if… then do… else do…”. An example could be “if provided with these Chinese symbols, then return that answer”.

Hence, this method states “how” to achieve the objective, and the “what” follows as a result. To obtain a complete AI, programmers must in principle be able to foresee every situation that the program is likely to encounter.
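As a rough illustration of such a rule chain, here is a minimal Python sketch. The function name `procedural_reply`, the two phrases and the fallback string are hypothetical, chosen only for this example; a real system would need far more rules.

```python
def procedural_reply(message: str) -> str:
    """Answer using only rules that the programmer wrote in advance."""
    # Each branch is an explicit "if ... then do ..." rule (illustrative phrases).
    if message == "你好":
        return "你好！"
    elif message == "谢谢":
        return "不客气"
    else:
        # Any input the programmer did not foresee ends up here.
        return "<no rule for this input>"


foreseen = procedural_reply("谢谢")
unforeseen = procedural_reply("再见")
```

The behavior is fully predictable: a foreseen input always yields the same hard-coded answer, and an unforeseen one always falls through to the final branch.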

Given a sufficiently large library of actions, an outside observer might not be able to distinguish a hard-coded intelligence from a genuine one.

The advantage of this method is its high level of predictability, which makes it easier to control the outcomes. This has consequences for the accountability of undesired behaviors.

Machine and Deep Learning: the trendy AI

The second method encompasses the current trend in AI: machine learning (ML), deep learning (DL) and everything directly associated with them. Both ML and DL are recognizable by the absence of explicit rules. The algorithm is asked to approximate a desired output, given multiple input data, by following computational and mathematical procedures.

How this is generally achieved, and whether it corresponds to what we know of the human brain, will be discussed in further articles. The key point is that no explicit instructions are given, only a set goal.

The programming procedure is all about stressing the “what to achieve” rather than the “how to achieve it”. There is a further distinction between ML and DL: the former is initially guided by a human selection of notable “features” (data characteristics or statistical properties), whereas the latter is given full capability to select the features by itself.
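To make the “what to achieve” idea concrete, the following minimal sketch states only a goal (make the predictions `w * x` as close as possible to the observed outputs) and lets plain gradient descent find the parameter. The data, the function name `fit_slope`, the learning rate and the number of steps are assumptions made for this illustration; it is a toy model, not a description of any specific ML or DL system.

```python
def fit_slope(xs, ys, lr=0.01, steps=500):
    """Find w such that w * x approximates y, by minimizing the mean squared error."""
    w = 0.0  # initial guess; no rule such as "multiply by 2" is ever written down
    n = len(xs)
    for _ in range(steps):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= lr * grad  # move w a small step toward a lower error
    return w


# Toy observations: the outputs happen to be twice the inputs.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]
w = fit_slope(xs, ys)
```

The program is never told the relationship between inputs and outputs. It recovers a value of `w` close to 2 only because that value best satisfies the stated objective on the given data.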

The immediate advantage of this method is its astonishing flexibility when dealing with new observations, as well as its ability to return statistical connections among features that might be novel to human observers. On the other hand, since the “how to achieve the objective” is less controlled, it is generally difficult to explain “why” a certain output is delivered.

There are currently two contrasting research directions: one is trying to make the algorithms more transparent and explainable, the other is recognizing more and more bias in their outputs, mostly, but not only, connected to the input data.

Despite recent advances, it is still uncertain if and when such issues will be solved in a satisfactory way. Immediate ethical questions stem from these facts: are we able to predict, in any situation, the outcome of an algorithm? Who is responsible for undesired behaviors? Should we keep updating the algorithms with new data, even after their market release? Could machines supported by such algorithms have rights, given that some sort of conscience might be attributed to them?

“Emerging” and collective intelligence

The third method is perhaps the least known to the general public. Its founding idea is the notion of “collective and emerging intelligence”, and its inspiration mostly comes from swarms. This is the method of multi-agent systems, which is almost as old as machine learning.

The reasoning behind it is the following: many complex systems display rich and varied behaviors when studied as a whole, at the population level, despite the single units being rather simple and mechanical. Referring to the insect swarm example, it is well known that individual termites do not possess spectacular levels of intelligence, but the whole colony is able to self-coordinate and to build impressive architectures.

Multi-agent systems are thus composed of single computing units, the agents, embedded in a colony. Each agent can be arbitrarily complex in its internal programming, ranging from purely reactive schemes (“if this is observed, then do this”) to processing schemes that mimic what is hypothesized by cognitive psychologists (e.g., the belief-desire-intention (BDI) architecture). On top of that, each agent is coupled with a varying number of other agents with which it can interact.

The rich dynamics that emerge from such interactions eventually lead to the solution of “non-trivial tasks” like airport traffic control, management of bidding, or distributed robot control.
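A toy example can hint at how a collective result emerges from purely local rules. In the sketch below, each agent sits on a ring and repeatedly replaces its value with the average of its own value and those of its two neighbours. No agent ever sees the whole population, yet all of them end up agreeing on the global average. The ring layout, the averaging rule, the initial values and the names `step` and `run` are assumptions made for this illustration, far simpler than real multi-agent systems.

```python
def step(values):
    """One synchronous round: each agent averages itself with its two ring neighbours."""
    n = len(values)
    return [
        (values[(i - 1) % n] + values[i] + values[(i + 1) % n]) / 3
        for i in range(n)
    ]


def run(values, rounds=200):
    """Apply the local rule repeatedly and return the agents' final values."""
    for _ in range(rounds):
        values = step(values)
    return values


initial = [0.0, 10.0, 4.0, 6.0, 5.0]  # each agent starts with a private value
final = run(initial)
```

Each agent follows a trivial reactive rule, and the agreement on a common value is a property of the colony rather than of any single unit. With this particular rule the common value is the mean of the initial values, which is 5.0 here.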

Although potentially extremely versatile, this method is exposed to the very emergent behaviors it relies on, which can be less predictable and might diverge from the desired output in unknown environments. This opens new ethical questions related to the concepts of emergence, of predictability and control, and of the individual/collective dichotomy.

So, what are we facing today?

All three methods can of course be combined to further develop complex, versatile – but arguably less predictable – systems.

However, a practical question for managing ethics and regulations could be: “which kind of system are we facing?”

So far, we have described a “bottom-up approach” to talking about AI: facing a blank page and deciding which method to employ to develop an intelligent system.

Conversely, it could be extremely difficult to face an unknown system and infer its architecture which, as described above, can have different consequences for ethics and legislation. For a fruitful debate on the short/mid-term impacts of such technologies, a better understanding of their underlying methods is thus recommended.

- Director of Interdisciplinary Research @ House of Ethics / Ethicist and Post-doc researcher /

Daniele Proverbio holds a PhD in computational sciences for systems biomedicine and complex systems as well as an MBA from Collège des Ingénieurs. He is currently affiliated with the University of Trento and follows scientific and applied multidisciplinary projects focused on complex systems and AI. Daniele is the co-author of Swarm Ethics™ with Katja Rausch. He is a science communicator and a life enthusiast.
